The line between reality and fabrication is blurring. The age of AI and deepfakes is advancing at a spectacular pace. ChatGPT’s website gets 1.5 billion visits a month, and ChatGPT 5, expected to be an order of magnitude more capable, is anticipated within about a year. AI has revolutionized the entertainment industry, and it has also opened up new avenues for criminals. One high-profile example is the use of AI voice cloning in vishing attacks.

You receive a frantic phone call: Mom is on vacation, and she’s panicking. Her voice, laced with fear and desperation, informs you that she's been detained in a foreign country. To secure her release, she needs money transferred to an overseas account immediately...

Overwhelmed by shock and concern, you act instinctively. Without questioning the call's authenticity, you proceed with the wire transfer to get her out. It is a vishing scam that costs even technically adept, well-informed victims significant sums of money.

The voice on the other end sounded just like hers. The caller ID might have displayed something like “Police Unit.” Everything seemed so real, so urgent.

This is the dystopian world we’re entering, where everyday scam calls are tailored and engineered like a heist out of Mission: Impossible. And it’s all so easy for perpetrators.

In this article, we outline three cases where voice cloning is used.

AI voice cloning creates realistic replicas of human voices by training a tool (cheap and easy to find) on a few seconds of a voice sample. That sample can be captured from posts on social media.

AI analyzes as little as three seconds of audio and replicates the unique vocal patterns, intonation, and speech cadence.

Once trained, it synthesizes new audio content that mimics the target individual's voice, making it virtually indistinguishable from the real person. The similarity is remarkable.

Vishing, a combination of “voice” and “phishing,” is a social engineering attack that utilizes voice calls to deceive individuals into revealing sensitive information or transferring funds. It exploits the power of human empathy by impersonating trusted individuals (family members, bank representatives, or law enforcement officials).

AI voice cloning has elevated vishing attacks to a new level. Scammers can easily replicate voices and bypass traditional authentication methods, convincing victims that the call comes from a trusted source.

The audio quality is jaw-dropping, and the uses range from a viral video impersonating a celebrity to a $35 million heist. The implications of this technology are so far-reaching that employees should be reminded often about how it works.

In 2023, a deepfake video surfaced online featuring podcaster Joe Rogan endorsing a male libido booster supplement. The video, meticulously crafted using AI voice cloning, was so convincing that it fooled many viewers.

BREAKING: This AI deepfake video rendering of Joe Rogan is going viral on TikTok. This is the start of massive waves of new scams & misinformation!

— MachineAlpha ⭕️ (@Machine4lpha) February 13, 2023

If only there was a decentralized GPU compute/rendering network that can authenticate provenance for IP. $RNDR https://t.co/m5WQvJjEuF pic.twitter.com/VqtQgEFcyJ

In a podcast episode from October, Rogan addressed the issue of deepfakes and said that he was "disappointed" that his likeness had been used in a fraudulent video. He also warned his listeners to be wary of such videos and to do their research before believing anything they see online.

This incident highlights how AI voice cloning can be misused to impersonate celebrities, spread misinformation, and promote fraudulent products or services.

In a case exposed by Red Goat, a group of cybercriminals employed AI voice cloning to perpetrate a multimillion-dollar heist.

Although the victims’ names were not disclosed, it is known that the Ministry of Justice of the United Arab Emirates requested assistance from the Criminal Division of the U.S. Department of Justice.

According to court documents, the victim company's branch manager received a phone call from someone claiming to be from the company headquarters. The caller's voice was so similar to a company director's that the branch manager believed the call was legitimate.

Using voice and email, the caller informed the branch manager that the company was about to make an acquisition and that a lawyer named “Martin Zelner” had been authorized to oversee the process.

The manager received multiple emails from Zelner regarding the acquisition, including a letter of authorization from the director to Zelner. As a result of these communications, when Zelner requested that the branch manager transfer $35 million to various accounts as part of the acquisition, the branch manager complied.

In 2023, a particularly disturbing vishing tactic emerged: using AI to mimic the voices of distressed loved ones. Scammers contact victims, impersonating their children or other family members, and claim to be in dire need of financial assistance due to an arrest or medical emergency.

These scams are becoming increasingly common because it is so easy to train AI voice models.

This case underscores the emotional manipulation employed by vishing scammers, preying on victims' vulnerabilities and exploiting their desire to help loved ones in distress.

Have you noticed an increase in spam and scam calls lately?

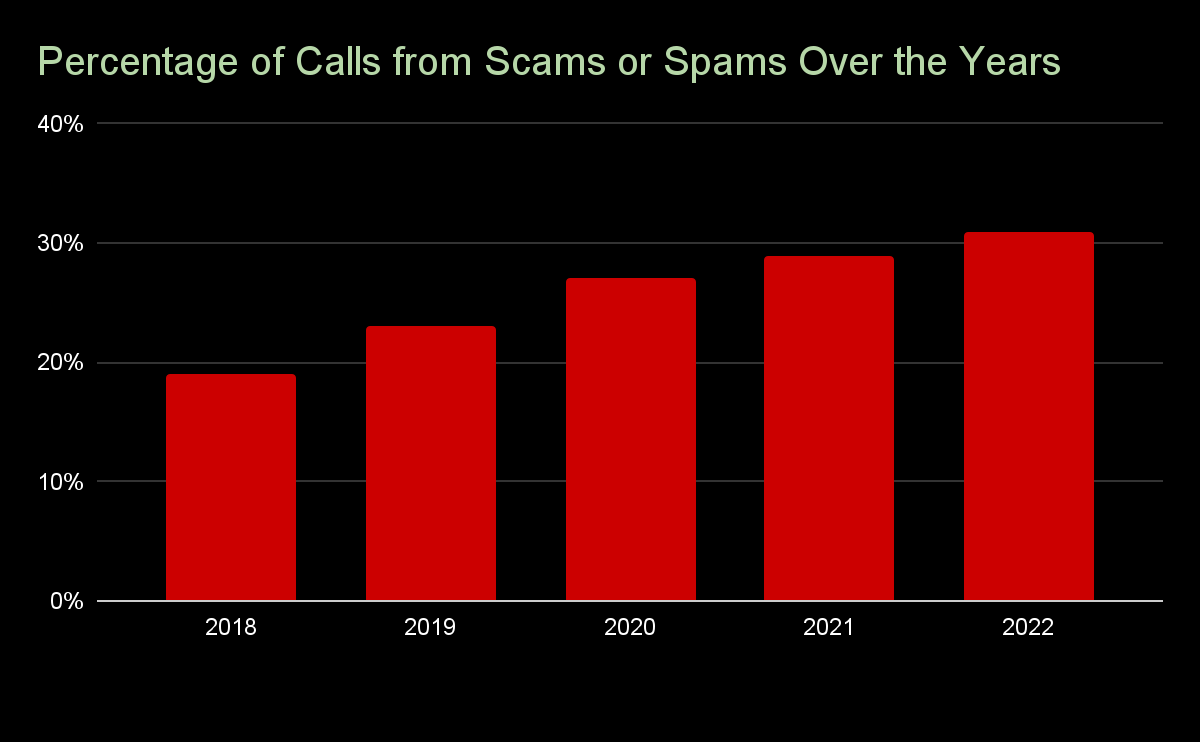

You have. According to a 2022 report by the app Truecaller, the number of spam and scam calls in the US has risen steadily over the past years. Last year alone, 31% of all calls received by US residents were from one of the two, up from 18% in 2018.

(alt text: graph showing the increase in scam and spam calls over the past 5 years)

Another finding of the Truecaller report was that younger Americans are more susceptible to scam calls than their older counterparts: in 2021, 41% of Americans aged 18-24 received a scam call, compared to 20% of Americans aged 65 and over.

Factors like the rise of robocalls, the availability of cheap calling technology, and the increasing sophistication of scam techniques powered by AI have made it easier for criminals to adopt this type of attack.

And the implications of AI voice cloning extend far beyond financial losses. The attacks can cause significant emotional distress and shame, damage reputations, and undermine public trust in institutions and individuals.

As a countermeasure, cybersecurity awareness professionals should continuously educate employees about vishing tactics and empower individuals with the knowledge and tools to protect themselves from these deceptive calls.

For your employees, here are measures to keep themselves, their employer, and their family members safe:

CISOs and cybersecurity teams are under tremendous pressure and must compete for qualified employees. While technical tools exist to test and track employees’ cybersecurity prowess, the key to engaging employees isn’t automation.

It’s actionable videos, quizzes, infographics, the latest cyber news, and answers to common questions written in a style they can understand and share with their families.

Aware Force delivers that service year-round. It’s easy to use and cost-effective. And everything we deliver is branded and customized, so all the content comes from your company’s IT team.

Aware Force generates unsolicited praise from employees and fierce loyalty from our customers. Check out our extensive cyber library and our awesome twice-monthly cybersecurity newsletter — all branded and tailored for you.